LOS ANGELES—A Tesla involved in a fatal crash on a Southern California freeway last week was operating on Autopilot at the time, authorities said.

The May 5 crash in Fontana, a city 50 miles east of Los Angeles, is under investigation by the National Highway Traffic Safety Administration. The probe is the 29th case involving a Tesla that the agency has responded to.

A 35-year-old man was killed when his Tesla Model 3 struck an overturned semi on a freeway at about 2:30 a.m. The driver’s name has not yet been made public. Another man was seriously injured when the electric vehicle hit him as he was helping the semi’s driver out of the wreck.

The California Highway Patrol, or CHP, announced on Thursday that the car had been operating Tesla’s partially automated driving system called Autopilot, which has been involved in multiple crashes. The Fontana crash marks at least the fourth U.S. death involving Autopilot.

“While the CHP does not normally comment on ongoing investigations, the Department recognizes the high level of interest centered around crashes involving Tesla vehicles,” the agency said in a statement. “We felt this information provides an opportunity to remind the public that driving is a complex task that requires a driver’s full attention.”

The federal safety investigation comes just after the CHP arrested another man who authorities have said was in the back seat of a Tesla that was driving this week on Interstate 80 near Oakland with no one behind the wheel.

CHP has not said if officials have determined whether the Tesla in the I-80 incident was operating on Autopilot, which can keep a car centered in its lane and a safe distance behind vehicles in front of it.

But it’s likely that either Autopilot or “Full Self-Driving” was in operation for the driver to be in the back seat. Tesla is allowing a limited number of owners to test its self-driving system.

Tesla, which has disbanded its public relations department, did not respond Friday to an email seeking comment. The company says in owner’s manuals and on its website that neither Autopilot nor “Full Self-Driving” is fully autonomous and that drivers must pay attention and be ready to intervene at any time.

Autopilot at times has had trouble dealing with stationary objects and traffic crossing in front of Teslas.

In two Florida crashes, from 2016 and 2019, cars with Autopilot in use drove beneath crossing tractor-trailers, killing the men driving the Teslas. In a 2018 crash in Mountain View, California, an Apple engineer driving on Autopilot was killed when his Tesla struck a highway barrier.

Tesla’s system, which uses cameras, radar, and short-range sonar, also has trouble handling stopped emergency vehicles. Teslas have struck several fire trucks and police vehicles that were stopped on freeways with their flashing emergency lights on.

For example, the National Highway Traffic Safety Administration in March sent a team to investigate after a Tesla on Autopilot ran into a Michigan State Police vehicle on Interstate 96 near Lansing. Neither the trooper nor the 22-year-old Tesla driver was injured, police said.

After the Florida and California fatal crashes, the National Transportation Safety Board recommended that Tesla develop a stronger system to ensure drivers are paying attention, and that it limit use of Autopilot to highways where it can work effectively. Neither Tesla nor the safety agency took action.

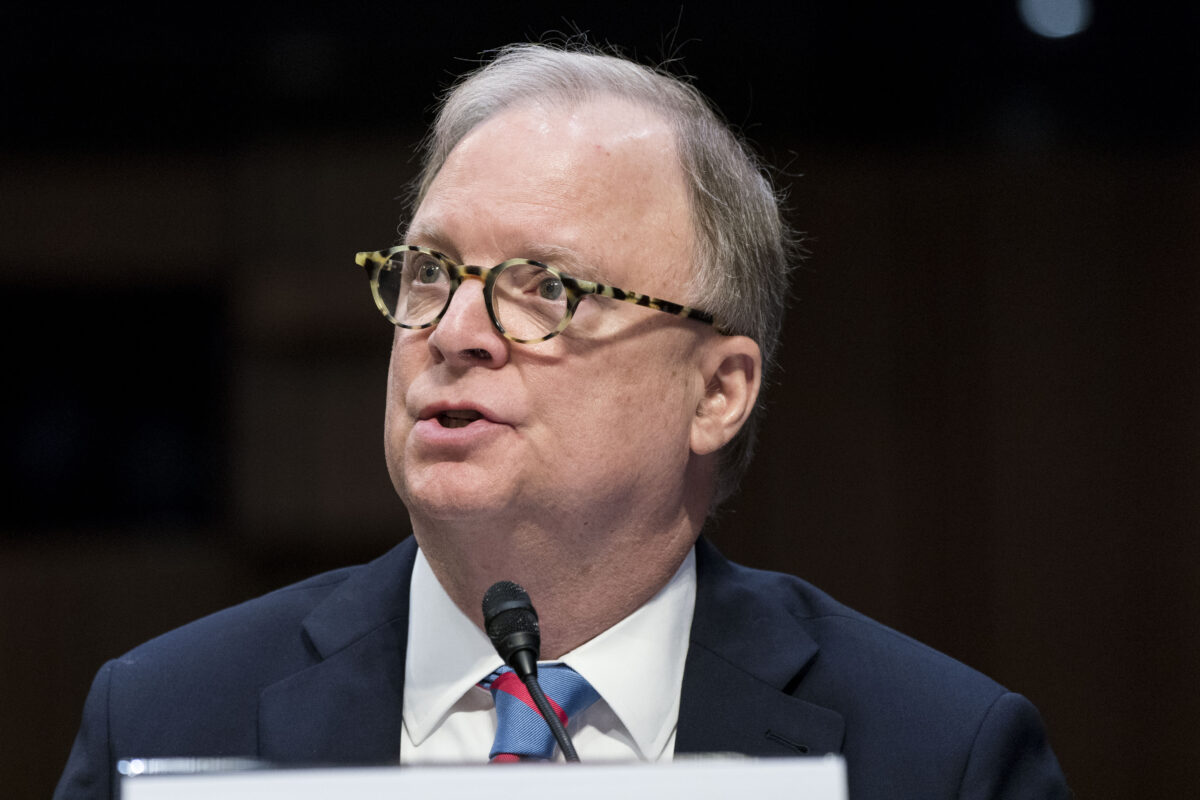

In a Feb. 1 letter to the U.S. Department of Transportation, NTSB Chairman Robert Sumwalt urged the department to enact regulations governing driver-assist systems such as Autopilot, as well as testing of autonomous vehicles. NHTSA has relied mainly on voluntary guidelines for the vehicles, taking a hands-off approach so it won’t hinder the development of new safety technology.

Sumwalt said that Tesla is using people who have bought the cars to test “Full Self-Driving” software on public roads with limited oversight or reporting requirements.

“Because NHTSA has put in place no requirements, manufacturers can operate and test vehicles virtually anywhere, even if the location exceeds the AV (autonomous vehicle) control system’s limitations,” Sumwalt wrote.

He added: “Although Tesla includes a disclaimer that ‘currently enabled features require active driver supervision and do not make the vehicle autonomous,’ NHTSA’s hands-off approach to oversight of AV testing poses a potential risk to motorists and other road users.”

NHTSA, which has the authority to regulate automated driving systems and seek recalls if necessary, seems to have developed a renewed interest in the systems since President Joe Biden took office.

By Stefanie Dazio and Tom Krisher
